Apache Airflow comes with an extensive set of operators and the community provides even more.

These operators are the building blocks used to construct tasks. When working with operators, it helps to think of each one conceptually as a predefined template for a specific type of task.

In Apache Airflow, a task is the basic unit of work to be executed. Tasks are arranged in DAGs in a manner that reflects their upstream and downstream dependencies.

Each DAG is instantiated with a start date and a schedule interval that describes how often the workflow should be run (such as daily or weekly). A DAG is a general overview of the workflow, and each execution of the DAG is referred to as a DAG run. This means that a DAG gets a new run each time it is executed, as defined by the schedule interval.
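The relationship between a start date, a schedule interval, and the resulting DAG runs can be sketched in plain Python. This is a simplified model and not Airflow's scheduler: the function name `dag_run_dates` and its `until` parameter are hypothetical, and the sketch ignores details such as time zones, catchup settings, and exactly when Airflow triggers each run.

```python
from datetime import datetime, timedelta


def dag_run_dates(start_date, schedule_interval, until):
    """Yield one date per DAG run, stepping from start_date by schedule_interval.

    Hypothetical helper for illustration only; it stops before `until`.
    """
    current = start_date
    while current < until:
        yield current
        current += schedule_interval


# A daily schedule starting on 1 January 2024, listed up to (not including) 4 January.
runs = list(
    dag_run_dates(
        start_date=datetime(2024, 1, 1),
        schedule_interval=timedelta(days=1),
        until=datetime(2024, 1, 4),
    )
)

for run_date in runs:
    print(run_date.date().isoformat())
```

Changing `schedule_interval` to `timedelta(weeks=1)` would model a weekly workflow with the same start date.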


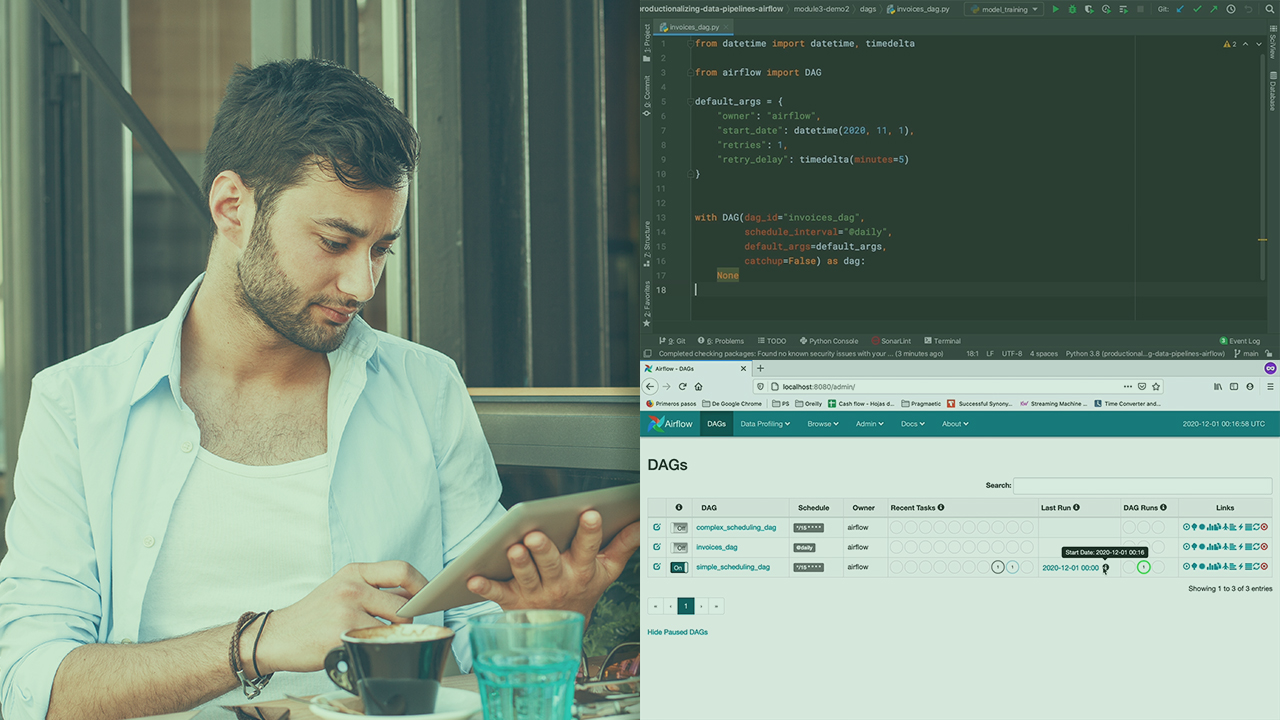

In graph theory, a Directed Acyclic Graph (DAG) is a conceptual representation of a series of activities. In programming, a DAG can be used as a mathematical abstraction of a data pipeline, defining a sequence of execution stages, or nodes, in a non-recurring algorithm.

In Apache Airflow, a DAG is a graph where the nodes represent tasks. Each DAG is written in Python and stored in the /dags folder in the Apache Airflow installation. A DAG is only concerned with how to execute tasks, such as the order in which they run, and not with what happens inside any particular task.
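To make the graph idea concrete, here is a minimal sketch that uses Python's standard `graphlib` module (Python 3.9 or later) rather than Airflow itself. The task names and the helpers `execution_order` and `has_cycle` are made up for illustration. Each node is a task, each edge points to an upstream dependency, and a cycle makes the graph invalid as a DAG.

```python
from graphlib import CycleError, TopologicalSorter

# Map each task to the set of upstream tasks it depends on (illustrative names).
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}


def execution_order(deps):
    """Return the tasks in an order that respects every upstream dependency."""
    return list(TopologicalSorter(deps).static_order())


def has_cycle(deps):
    """Return True if the dependency graph contains a cycle (so it is not a DAG)."""
    try:
        execution_order(deps)
    except CycleError:
        return True
    return False


order = execution_order(dependencies)
print(order)
print(has_cycle({"a": {"b"}, "b": {"a"}}))
```

The graph only records which task must finish before another starts. What each task actually does is left out entirely, which mirrors how a DAG describes execution order rather than task internals.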

What use cases are best for Apache Airflow? Imagine you need to run a SQL query daily in a data warehouse and use the results to call a third-party Application Programming Interface (API). Perhaps the API response needs to be sent to a client or a different internal application. What happens if one part of the pipeline fails? Should the next tasks execute, and can you easily rerun the failing tasks? This is exactly where Apache Airflow comes in.

If you need to process data, you can integrate a different tool into a pipeline, such as Apache Spark.
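The failure and rerun questions in this scenario can be illustrated with a small plain-Python sketch. It is not how Airflow is implemented: `run_pipeline`, the three task functions, and the simulated API outage are all hypothetical, and the status strings are just labels chosen for this example. The point is only to show downstream tasks being held back after a failure, and a rerun that skips work that already succeeded.

```python
def run_pipeline(tasks, previous_state=None):
    """Run tasks in order; skip past successes and block tasks after a failure.

    tasks: ordered list of (name, callable) pairs.
    previous_state: statuses from an earlier attempt, if any.
    """
    state = dict(previous_state or {})
    upstream_ok = True
    for name, func in tasks:
        if state.get(name) == "success":
            continue  # already completed in an earlier attempt
        if not upstream_ok:
            state[name] = "upstream_failed"
            continue
        try:
            func()
            state[name] = "success"
        except Exception:
            state[name] = "failed"
            upstream_ok = False
    return state


calls = {"sql": 0, "api": 0}


def run_sql_query():
    calls["sql"] += 1  # stand-in for the daily warehouse query


def call_api():
    calls["api"] += 1
    if calls["api"] == 1:
        raise ConnectionError("simulated third-party outage")


def send_to_client():
    pass  # stand-in for forwarding the API response


tasks = [
    ("run_sql_query", run_sql_query),
    ("call_api", call_api),
    ("send_to_client", send_to_client),
]

first_attempt = run_pipeline(tasks)
second_attempt = run_pipeline(tasks, first_attempt)
print(first_attempt)
print(second_attempt)
```

On the first attempt the API call fails, so the final task is not executed. On the second attempt only the failed task and the task after it run, and the SQL query is not repeated.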
